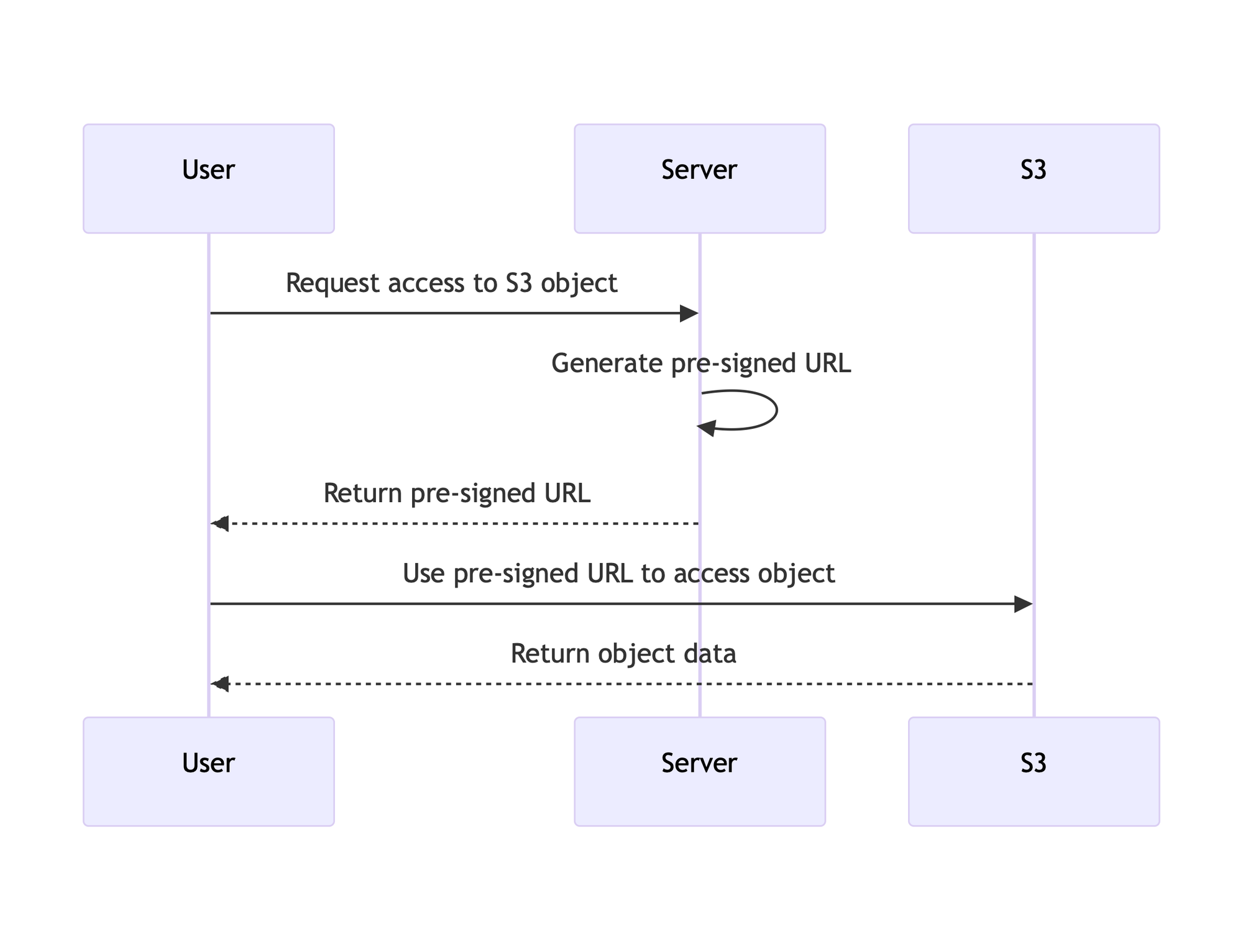

Consider the following: you have an S3 bucket where users upload data. Sometimes other users need to download that data, but it's sensitive. So, like a smart engineer, you keep the bucket private and generate a pre-signed URL so the object is only accessible for the few minutes it takes the user to download it.

A week, a month, or even a year later, there are reports that your org has leaked sensitive data from that bucket for anyone to download. You now have the chance to get to know your org's legal team quite well.

Is this scenario plausible? Are the objects only available for the time frame you specify? What can you do to mitigate this risk?

What Are Pre-Signed S3 URLs?

Pre-signed URLs are a pretty cool feature from the S3 team. The concept is simple.

For downloads, you take a private object you want to grant access to and generate a special URL that allows access to it for a set amount of time.

For uploads, you can specify the bucket and object name and create a URL that can be used in a PUT request to upload an object to that bucket.

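The user uploading or downloading the object doesn't need any special permissions or roles of their own, which removes the need for complicated resource policies that are easy to get wrong. The flip side is that anyone who holds the URL can use it until it expires, because the request is authorized with the credentials of whoever signed it.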

For the sake of the rest of this write-up, I'll be talking about pre-signed URLs for downloading objects.

How to Generate Your Own Pre-Signed URL

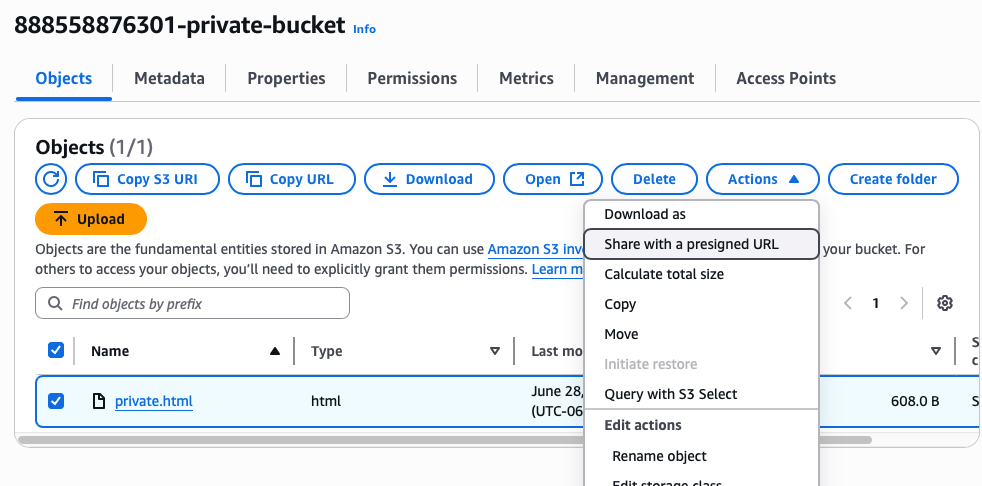

You can generate a pre-signed URL in a few ways, including directly in the S3 console: select the object, click "Actions", and then click "Share with pre-signed URL".

Generating through an SDK is just as simple. As an example, in the Ruby SDK:

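```ruby
require 'aws-sdk-s3'

# S3 client for the region configured in the environment
s3_client = Aws::S3::Client.new(region: ENV['AWS_REGION'])

# The presigner signs URLs with the client's credentials
presigner = Aws::S3::Presigner.new(client: s3_client)

# Sign a GET for a single object, valid for 900 seconds (15 minutes)
url = presigner.presigned_url(
  :get_object,
  bucket: '888558876301-private-bucket',
  key: 'private.html',
  expires_in: 900
)

puts url
```

Pretty much the simplest implementation of pre-signed URL creation.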

The snippet above prints your pre-signed URL: an obscenely long URL with multiple query string parameters such as X-Amz-Security-Token, X-Amz-Credential, and X-Amz-Algorithm, to name a few.

After you generate your pre-signed URL, the clock begins ticking. In this case, the link is valid for 900 seconds or 15 minutes.
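If you come across a pre-signed URL later (in a log, a ticket, or an archive), you can read its deadline straight from the query string. Below is a minimal sketch, assuming a SigV4 URL that carries X-Amz-Date (in `YYYYMMDDTHHMMSSZ` form) and X-Amz-Expires (in seconds); the helper names `presigned_url_expiry` and `presigned_url_expired?` are hypothetical. A URL signed with temporary credentials can stop working earlier, once those credentials expire. And this deadline only governs S3: it does nothing to a copy someone saved while the URL was still valid.

```ruby
require 'uri'

# Hypothetical helper: the UTC time after which S3 should reject a SigV4
# pre-signed URL, computed as X-Amz-Date + X-Amz-Expires (seconds).
def presigned_url_expiry(url)
  params = URI.decode_www_form(URI(url).query.to_s).to_h
  stamp = params.fetch('X-Amz-Date')
  parts = stamp.match(/\A(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z\z/)
  raise ArgumentError, "unexpected X-Amz-Date: #{stamp}" unless parts

  signed_at = Time.utc(*parts.captures.map(&:to_i))
  signed_at + Integer(params.fetch('X-Amz-Expires'))
end

# Hypothetical helper: true once the signing window has closed.
def presigned_url_expired?(url, now = Time.now.utc)
  now >= presigned_url_expiry(url)
end
```

Called on the URL printed by the earlier snippet, `presigned_url_expiry(url)` should land 15 minutes after the moment it was signed.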

The Risk: Unintended Exposure

So, in the opening scenario, you have an app generating these URLs and handing them out. Maybe the user knows it's a pre-signed URL, maybe not.

The Wayback Machine can archive single pages on request. However, it's been observed that when a domain has been submitted for archiving enough times, Archive.org may start crawling it automatically. Crawling can also be triggered by a site's popularity or by its inclusion on lists such as the Alexa Top 1M list.

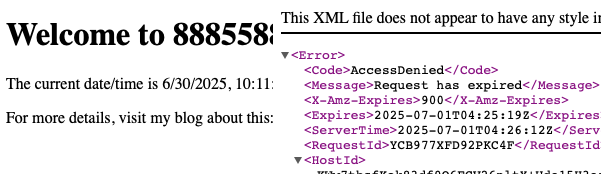

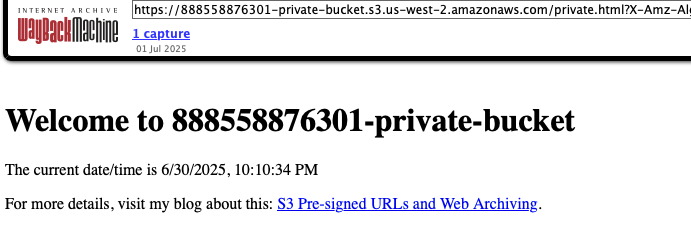

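So if the Wayback Machine happens to archive your pre-signed URL before it expires, perhaps because you chose a long expiry time or through plain bad luck, it stores a copy of the object that stays publicly viewable long after the URL itself stops working. You may end up in a scenario like the one below.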

These screenshots show the original link and the request failing after it expired. You can still check this out here. The next screenshot shows what the Wayback Machine has for the URL, which you can also check here.

Now imagine instead of a simple HTML page, the archived page was a vulnerability report, financial details, or other sensitive doc.

Mitigation Options and Alternatives

So what can you do about this? The first step is to go around to the various archive sites and request a takedown and a block for the domain. The process is well documented for the Wayback Machine; your mileage may vary with other archiving sites.
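Before filing takedown requests, it helps to know what has actually been captured. Here is a minimal sketch, assuming the Wayback Machine's public CDX server API (the `web.archive.org/cdx/search/cdx` endpoint with its `url`, `matchType`, and `output=json` parameters, whose JSON response is a header row followed by one row per capture); the helper names are hypothetical. It keeps only captures whose original URL carries an `X-Amz-Signature` parameter, i.e. archived pre-signed URLs. Depending on how your URLs are addressed (virtual-hosted or path-style, regional endpoints), you may need to query more than one hostname form.

```ruby
require 'json'
require 'net/http'
require 'uri'

# Assumed public CDX endpoint of the Wayback Machine
CDX_ENDPOINT = 'https://web.archive.org/cdx/search/cdx'

# Hypothetical helper: CDX query for every capture under a URL prefix
def cdx_query_uri(prefix)
  uri = URI(CDX_ENDPOINT)
  uri.query = URI.encode_www_form(url: prefix, matchType: 'prefix', output: 'json')
  uri
end

# Hypothetical helper: turn CDX JSON (header row + data rows) into hashes,
# keeping only captures of URLs that carry a SigV4 signature.
def presigned_captures(cdx_json)
  return [] if cdx_json.to_s.strip.empty? # treat an empty body as no captures

  rows = JSON.parse(cdx_json)
  return [] if rows.empty?

  header, *data = rows
  data.map { |row| header.zip(row).to_h }
      .select { |capture| capture['original'].to_s.include?('X-Amz-Signature=') }
end

# Performs the actual network request; each result has 'timestamp' and
# 'original', which together identify the snapshot to report.
def fetch_presigned_captures(prefix)
  presigned_captures(Net::HTTP.get(cdx_query_uri(prefix)))
end
```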

The natural next thought is to make sure crawlers are blocked or restricted using tried-and-true methods like headers or robots.txt. Unfortunately, many crawlers, including the Wayback Machine, have stopped respecting these.

Another mitigation requires some additional development work but is more reliable and secure: add an extra check before the object can be retrieved, so that possessing the link alone isn't enough. There are a few flavors of this. For example, you can implement an allow list that is specified when the link is created. Another option is to require the viewer to pass an additional authorization check, such as verifying their email or entering a code to view. The drawback is development time: in most cases, it's a hard sell to leadership that more development time is needed when pre-signed URLs might just be "good enough".
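A minimal sketch of the allow-list flavor follows, under a few loudly stated assumptions: the `ShareGate` class, its in-memory share store, and the `presign` callable are all hypothetical, and the viewer's email is assumed to have been verified upstream (for example by a login or an emailed code). The link you hand out is an opaque token for your own app. A fresh pre-signed URL with a very short expiry is minted only after the check passes, so an archived copy of the share link gives a crawler nothing.

```ruby
require 'securerandom'

# Hypothetical gate: the shared link is an opaque token, and a short-lived
# S3 pre-signed URL is only minted after the viewer passes the allow list.
class ShareGate
  Share = Struct.new(:bucket, :key, :allowed_emails)

  # presign: hypothetical callable (bucket, key, expires_in) -> URL string
  def initialize(presign)
    @presign = presign
    @shares = {} # in-memory store, for illustration only
  end

  # Record the allow list at link creation; returns the token to share.
  def create_share(bucket:, key:, allowed_emails:)
    token = SecureRandom.urlsafe_base64(32)
    @shares[token] = Share.new(bucket, key, allowed_emails.map(&:downcase))
    token
  end

  # viewer_email is assumed to be verified upstream (login, emailed code).
  # Returns a fresh pre-signed URL, or nil if the token or viewer is not allowed.
  def resolve(token, viewer_email, expires_in: 60)
    share = @shares[token]
    return nil unless share && share.allowed_emails.include?(viewer_email.to_s.downcase)

    @presign.call(share.bucket, share.key, expires_in)
  end
end

# Example wiring with the AWS SDK for Ruby (not run here):
#   presigner = Aws::S3::Presigner.new(client: Aws::S3::Client.new)
#   gate = ShareGate.new(lambda { |bucket, key, ttl|
#     presigner.presigned_url(:get_object, bucket: bucket, key: key, expires_in: ttl)
#   })
```

Keeping the S3 URL's lifetime down to seconds shrinks the window in which it can be archived, while the durable link is one your app controls and can simply stop honoring.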

Wrap Up

Is there a good time and place to use pre-signed URLs? Just like with most technology, there absolutely is. It's up to the product and security teams to figure out the right balance of availability, ease of implementation, and security.

Overall, I thought this was an interesting exposure risk that hadn't been on my mind when I'd seen pre-signed URLs in use. I don't think there is anything wrong with the tech or the feature, but awareness is a big part of our jobs. Perhaps I'm the only one who hadn't thought of this risk, but just in case I'm not, I wanted to share my thoughts on the off chance they help someone.