A tale of why keeping up in the industry is critical

TLDR

- When encrypting at scale, customer-managed keys in KMS can be very costly. Using bucket keys will reduce the calls to KMS, which will reduce costs.

- Enabling bucket keys cut our cost for KMS requests alone from $1,500 per day to $300 per day, a savings of roughly $36,000 per month!

I want to share a story that might help others from a technical perspective, and that might also encourage a broader thought: just because everything is running doesn’t mean there is no reason to keep improving existing infrastructure.

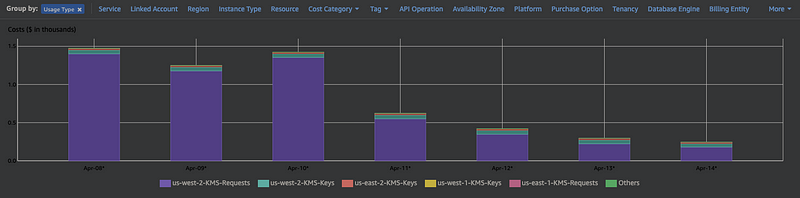

You get an alert from your finance team. Last month’s AWS bill had an unexpected spike in cost. You pull up Cost Explorer and immediately see the increase. When you dig in, you see that the spike is in KMS spend. It crept up from an average of a few hundred dollars per day to a few thousand.

It’s not the end of the world, but you need to figure it out. You’ll investigate it when you have time between different projects.

A few days later, you get a message in Slack: “We’re hitting our KMS limit in production.”

If you’ve never looked, the default rate limit for symmetric cryptographic requests in KMS is 50,000 requests/second.

Your cost issue just became a production incident though.

The incident itself wasn’t too significant, but the discovery of what was happening was.

What Was Making 50,000 KMS Requests per Second?

KMS monitoring isn’t great. CloudWatch doesn’t split out the individual operations, so you can’t get the rate of just kms:Decrypt calls. The monitoring overview page of the KMS developer guide briefly hints at this:

AWS KMS API activity for data plane operations. These are cryptographic operations that use a KMS key, such as Decrypt, Encrypt, ReEncrypt, and GenerateDataKey.

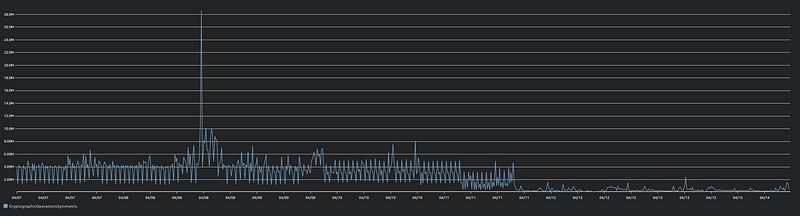

So when looking at CloudWatch you see a CryptographicOperationsSymmetric metric under All > Usage > By AWS Resource.
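That metric can also be pulled programmatically. The following is a minimal sketch, not the query we actually ran: it only builds the arguments for CloudWatch’s GetMetricStatistics call. The `AWS/Usage` namespace, the `CallCount` metric name, and the dimension values are assumptions about how AWS publishes KMS usage metrics, so verify them against what your own console shows. The helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def kms_usage_query(end: datetime, hours: int = 24, period_seconds: int = 60) -> dict:
    """Build GetMetricStatistics arguments for symmetric KMS operations."""
    return {
        "Namespace": "AWS/Usage",   # assumption: namespace for usage metrics
        "MetricName": "CallCount",  # assumption: metric name for API call counts
        "Dimensions": [             # assumption: dimension names and values
            {"Name": "Service", "Value": "KMS"},
            {"Name": "Type", "Value": "API"},
            {"Name": "Resource", "Value": "CryptographicOperationsSymmetric"},
            {"Name": "Class", "Value": "None"},
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": period_seconds,
        "Statistics": ["Sum"],
    }


def requests_per_second(call_count_sum: float, period_seconds: int) -> float:
    """Convert a per-period Sum datapoint into an average request rate."""
    return call_count_sum / period_seconds


# Hypothetical end time, chosen only for illustration.
query = kms_usage_query(datetime(2021, 3, 1, tzinfo=timezone.utc))

# With boto3 installed and credentials configured, the query could be sent as:
#   boto3.client("cloudwatch").get_metric_statistics(**query)
```

Dividing each one-minute `Sum` by 60 gives an average rate that can be compared against the per-second limit, although short bursts inside a minute will be smoothed out.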

Fortunately, we had a time frame: the spikes were happening every hour, on the hour. With nothing standing out in the applications, we turned to the CloudTrail logs. They showed an abnormally large number of requests from our QuickSight service role to KMS, with the encryption context pointing at our data warehouse.
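That kind of log digging can be sketched in a few lines. This is an illustration under stated assumptions, not the tooling we used: it assumes the CloudTrail records are already loaded as dictionaries, and that S3-originated KMS calls carry an `aws:s3:arn` entry in their encryption context. The function name, role ARN, and bucket ARN are all hypothetical.

```python
from collections import Counter
from typing import Iterable, Mapping


def count_kms_callers(records: Iterable[Mapping]) -> Counter:
    """Count KMS events per (caller ARN, event name, S3 ARN in the encryption context)."""
    counts: Counter = Counter()
    for record in records:
        if record.get("eventSource") != "kms.amazonaws.com":
            continue  # skip events from other services
        caller = (record.get("userIdentity") or {}).get("arn", "unknown")
        context = (record.get("requestParameters") or {}).get("encryptionContext") or {}
        s3_arn = context.get("aws:s3:arn", "none")
        counts[(caller, record.get("eventName", "unknown"), s3_arn)] += 1
    return counts


# Hypothetical sample records; real CloudTrail events carry many more fields.
QS_ROLE = "arn:aws:sts::111122223333:assumed-role/example-quicksight-role/session"
WAREHOUSE = "arn:aws:s3:::example-data-warehouse"
SAMPLE_RECORDS = [
    {"eventSource": "kms.amazonaws.com", "eventName": "Decrypt",
     "userIdentity": {"arn": QS_ROLE},
     "requestParameters": {"encryptionContext": {"aws:s3:arn": WAREHOUSE}}},
    {"eventSource": "kms.amazonaws.com", "eventName": "Decrypt",
     "userIdentity": {"arn": QS_ROLE},
     "requestParameters": {"encryptionContext": {"aws:s3:arn": WAREHOUSE}}},
    {"eventSource": "kms.amazonaws.com", "eventName": "GenerateDataKey",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:role/example-app-role"},
     "requestParameters": None},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject"},
]

top_callers = count_kms_callers(SAMPLE_RECORDS).most_common(3)
for (caller, event, s3_arn), count in top_callers:
    print(count, event, caller, s3_arn)
```

Sorting by count puts the noisiest caller first, and that is the shape of result that pointed us at a single role and a single bucket.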

That presented a wrinkle: we needed to keep our SPICE datasets fresh, but we didn’t have many options. The team mitigated the immediate problem by spreading out the refreshes so that we would no longer hit the rate limit. Even so, we were still seeing hundreds of millions of requests to KMS daily, which is expensive at current prices:

500,000,000 requests/day * $0.03 per 10,000 requests = $1,500 per day
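The same arithmetic is easy to keep around as a small helper for trying other volumes. This is a minimal sketch that uses only the request price quoted above. The function name is hypothetical, and it ignores any free tier or per-key monthly charges.

```python
from decimal import Decimal

# Price quoted in this post: $0.03 per 10,000 KMS requests.
PRICE_PER_10K_REQUESTS = Decimal("0.03")


def kms_request_cost(requests: int, price_per_10k: Decimal = PRICE_PER_10K_REQUESTS) -> Decimal:
    """Return the request charge in dollars for a given number of KMS requests."""
    return Decimal(requests) / Decimal(10_000) * price_per_10k


daily_cost = kms_request_cost(500_000_000)
print(f"${daily_cost:,.2f} per day")
```

For 500 million requests this works out to the $1,500 per day shown above.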

Enter S3 Bucket Keys

In December of 2020, Amazon introduced S3 Bucket Keys, advertised as a way to reduce requests to KMS (and the associated costs).

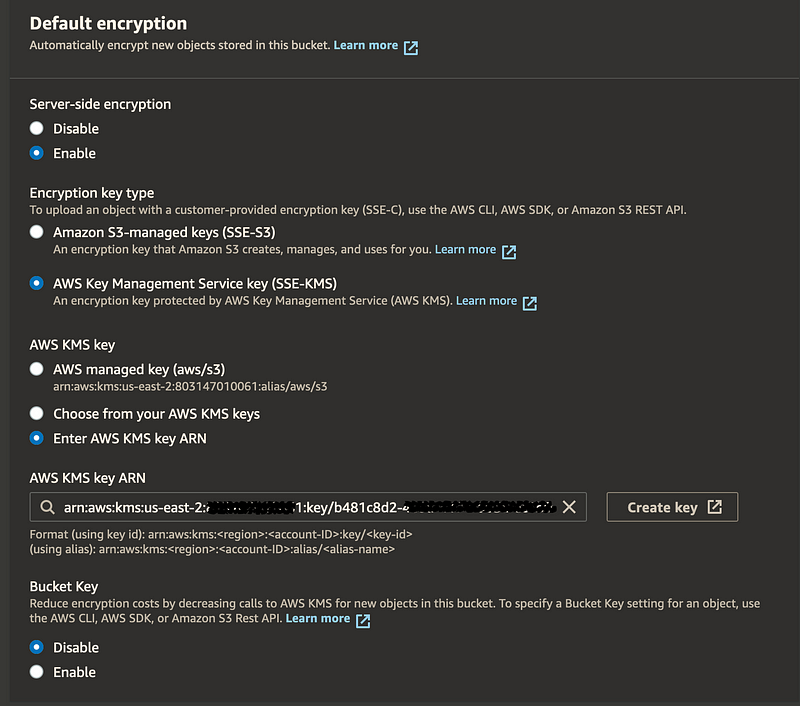

The implementation is simple. In the AWS console, it’s a single check box.

In Terraform, it’s equally simple using the aws_s3_bucket_server_side_encryption_configuration resource:

    resource "aws_s3_bucket_server_side_encryption_configuration" "example" {
      bucket = aws_s3_bucket.mybucket.bucket

      rule {
        apply_server_side_encryption_by_default {
          kms_master_key_id = aws_kms_key.mykey.arn
          sse_algorithm     = "aws:kms"
        }

        bucket_key_enabled = true
      }
    }

The above solved the problem for new objects going forward. However, our data warehouse fetched the entire data set on each refresh, and the bucket contained terabytes of data encrypted before the bucket key was enabled. As a result, we were still seeing millions of KMS requests. AWS calls this out in the S3 Bucket Key documentation:

When you configure an S3 Bucket Key, objects that are already in the bucket do not use the S3 Bucket Key. To configure an S3 Bucket Key for existing objects, you can use a COPY operation
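For a single object, the copy described in that quote amounts to copying the object onto itself with the bucket key turned on. The sketch below only builds the arguments for boto3’s `copy_object` call. The function name, bucket, object key, and KMS key ARN are hypothetical, and a single CopyObject request only works for objects up to 5 GB. It illustrates the idea rather than the bulk re-encryption itself.

```python
def build_reencrypt_copy_args(bucket: str, key: str, kms_key_arn: str) -> dict:
    """Build copy_object arguments that rewrite an object in place with a bucket key."""
    return {
        "Bucket": bucket,                               # destination bucket
        "Key": key,                                     # destination key (same object)
        "CopySource": {"Bucket": bucket, "Key": key},   # copy the object onto itself
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_arn,
        "BucketKeyEnabled": True,                       # the setting that cuts KMS calls
    }


# Hypothetical names, for illustration only.
copy_args = build_reencrypt_copy_args(
    bucket="example-data-warehouse",
    key="exports/2021/part-0000.parquet",
    kms_key_arn="arn:aws:kms:us-east-1:111122223333:key/example-key-id",
)

# With boto3 installed and credentials configured, the copy could be issued as:
#   boto3.client("s3").copy_object(**copy_args)
```

Looping over terabytes of objects this way would be slow and fragile, which is why a managed bulk job is the better fit at that scale.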

So the team used an S3 Batch Operation to re-encrypt the data within a few hours. The effect was noticeable immediately. Looking back, it’s even more distinct:

The Results

With essentially a one-line code change and a straightforward batch operation, we reduced our KMS requests from an average of 500M per day to 38M per day. The bill showed similar results, dropping from $1,500 per day back to $300 per day in KMS requests.

This Story is Never Finished

The production incident was resolved, accounting would be happy, but the story isn’t finished.

The story is never finished.

We live in a cycle of continuous improvement.

As long as Amazon, Microsoft, and the rest of the industry continue to iterate and improve, it will fall onto the engineering staff of companies to keep their knowledge relevant and constantly improve. Our implementation and feedback to the industry feed the next big release.

It’s easy to fall into feature factory mode, where you only track new code and features going into the environment. It’s essential to step back sometimes and consider the larger picture. How has the landscape changed since you created that CloudFront distribution? What new features can be leveraged to improve engineering productivity and customer experience? It falls to engineering leadership to guide and mentor the rest of the staff in these thoughts and approaches. That benefits not only the company but also you, your engineers, and others with whom you share what you learn.