Testing your infrastructure as code is just as important as testing your application code. And it doesn’t have to be a nightmare!

TLDR

- With a combination of LocalStack and GitHub Actions, you can do easy and effective testing for most of your Terraform code

- This method reduces external dependencies on libraries and avoids having to know the best practices of programming languages like Ruby and Go.

- Because LocalStack is essentially a mocked AWS service, there are limitations in which services and features it offers.

- See demo repo here: mencarellic/terraform-aws-module

When I first saw production Terraform code, it was a single repository split into three directories: one for the production account, one for a development account, and one for the modules. Fast forward a few years, and I’m now with a new company with a similar format, except we use two clouds and have 18 different environments across those clouds.

When I checked earlier, our modules sub-directory was 38,312 lines across 57 modules. Needless to say, we’re in the midst of moving from using a single repo to using a repo per module and leveraging Terraform Cloud’s Private Registry. Now the question is: when I push changes to a module, how do I ensure that the module will validate, plan, and apply successfully without too much interruption to the release workflow? Validate and plan are pretty straightforward; I can run both locally from the CLI. Applies are trickier, and no one wants to debug Terraform failures while the rest of the team is trying to publish their changes.

There are plenty of patterns, such as using sandbox or testing accounts and Terraform test frameworks like Kitchen or Terratest. But I don’t want to introduce another account and I definitely don’t want to introduce more code to manage. That’s where LocalStack fits in.

LocalStack

LocalStack self-describes in their GitHub repo as: “A fully functional local AWS cloud stack…” It is a feature-rich AWS emulator that runs in a container and has pretty wide coverage. The full list of services can be found here: AWS Service Feature Coverage. The community version contains a reasonable number of services that can cover a lot of different modules, while the Pro feature set adds some major parts of the AWS stack (notably API Gateway v2, CloudFront, and RDS).

The setup for LocalStack is pretty straightforward: pull the Docker image and run it. The development team released a CLI tool that helps with orchestration, so what we end up running is:

$ pip install localstack

$ docker pull localstack/localstack

$ localstack start -d

For setting up Terraform to work with LocalStack, the team over there has an integration page with details, though I’ll cover some here as well.

The main thing that you need to know is that you’ll be setting some custom endpoints in your provider configuration to tell Terraform to reach out to localhost instead of AWS for the plan and apply steps.
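As a rough sketch of what that provider configuration can look like, the block below points two services at LocalStack's default port and turns off the startup checks that would otherwise call real AWS. The placeholder credentials, the region, and the choice of services are assumptions for illustration; the argument names come from the Terraform AWS provider.

```hcl
# Sketch only: an AWS provider block aimed at a local LocalStack container.
provider "aws" {
  region     = "us-east-1" # assumption: any valid region name is fine locally
  access_key = "test"      # placeholder; LocalStack accepts dummy credentials by default
  secret_key = "test"

  # Skip the checks that would otherwise reach out to real AWS at startup.
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true

  # Route API calls for these services to LocalStack instead of AWS.
  # Add one entry per service your module touches.
  endpoints {
    sqs = "http://localhost:4566"
    sts = "http://localhost:4566"
  }
}
```

Any service without an entry in `endpoints` would still resolve to the real AWS endpoint, so it is worth listing every service the module under test uses.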

GitHub Action

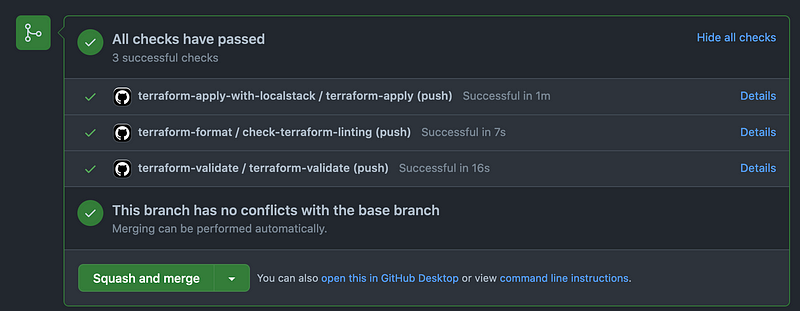

The GitHub Action workflow file really only needs to do three things:

- Install Terraform

- Install the LocalStack CLI and start the Docker container (see above)

- Run `terraform apply -auto-approve`

The workflow file I have does a couple of other things based on personal preference, but you can boil the file down to fewer than twenty lines if you really want to.

Some of the additional things I do (and why) are:

- Ignore the `main` branch, since I don’t want this to run on pushes to that branch, only on feature branches

- Use ASDF and a `.tool-versions` file to manage my Terraform version. You could also use the GitHub Action hashicorp/setup-terraform if desired

- Run a `terraform plan` before the apply so I can see in the logs whether a failure is a plan or apply error

Bringing It All Together

Once you get your GitHub Action workflow file set up, you just need to add a directory where you can place your tests. Inside the directory, you can have a single file where you define your provider and module blocks.
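A minimal sketch of the module half of such a test file might look like the following. The file path, the module label `under_test`, the relative `source`, and the `name` input are all hypothetical; swap in whatever your module actually defines.

```hcl
# tests/main.tf (hypothetical path): call the module so CI can plan and apply it.
module "under_test" {
  # Assumption: the module code lives at the repository root,
  # one level above the tests directory.
  source = "../"

  # Hypothetical input variable; replace with the inputs your module expects.
  name = "localstack-test"
}
```

The provider block sits alongside this in the same file, so running `terraform init` and `terraform apply -auto-approve` from the tests directory exercises the module end to end.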

You’ll need to force the provider to target your LocalStack endpoints instead of the live AWS endpoints. You can do that with the endpoints block:

    endpoints {
      apigateway     = "http://localhost:4566"
      apigatewayv2   = "http://localhost:4566"
      cloudformation = "http://localhost:4566"
      cloudwatch     = "http://localhost:4566"
      dynamodb       = "http://localhost:4566"
      ec2            = "http://localhost:4566"
      es             = "http://localhost:4566"
      elasticache    = "http://localhost:4566"
      firehose       = "http://localhost:4566"
      iam            = "http://localhost:4566"
      kinesis        = "http://localhost:4566"
      kms            = "http://localhost:4566"
      lambda         = "http://localhost:4566"
      rds            = "http://localhost:4566"
      redshift       = "http://localhost:4566"
      route53        = "http://localhost:4566"
      s3             = "http://s3.localhost.localstack.cloud:4566"
      secretsmanager = "http://localhost:4566"
      ses            = "http://localhost:4566"
      sns            = "http://localhost:4566"
      sqs            = "http://localhost:4566"
      ssm            = "http://localhost:4566"
      stepfunctions  = "http://localhost:4566"
      sts            = "http://localhost:4566"
    }

After that you can open up some PRs to test your positive and negative test cases.